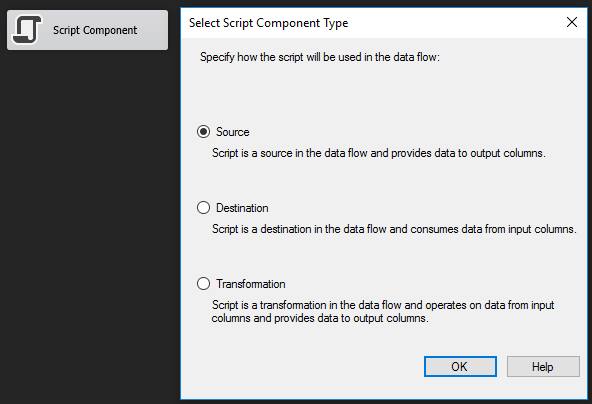

SSIS does not have a built in source component for JSON data, so you’ll have to use the Script Component. When you drag the Script Component into a data flow, you’ll be asked how you want to use it. In this case you’ll use it as a source.

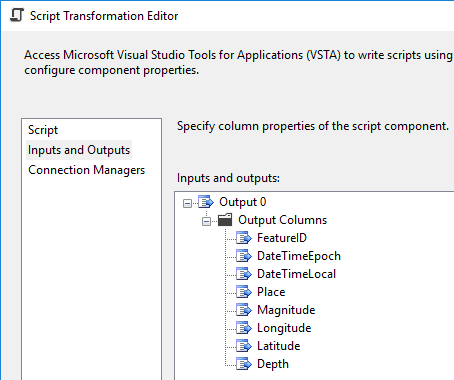

Define Output Columns

Once the Script Component has been defined as a source, the output columns can be defined. For this post, USGS Earthquake data will serve as an example. Be careful to choose the correct data types here. This can be tedious because you have to choose the correct data types in the C# code as well, and ensure that they correspond with the SSIS types. It’s helpful to bookmark a SSIS data type translation table for reference.

Editing the Script Component

With the output columns defined, it’s time for the fun stuff - the C# code. I’m assuming the reader has some experience with the Script Component and C#, so I’m not going to go through everything in great detail. I’ll highlight the main steps below and provide a link to the complete C# script at the end of this post.

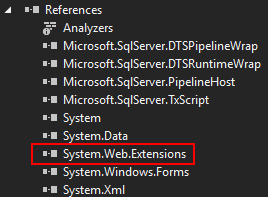

In the script editor, add a reference to System.Web.Extensions.

Generate C# classes from JSON source data. quicktype is a great tool for this. These C# classes are the blueprints for converting JSON to .NET objects.

Download the JSON data using the WebClient class.

public static string DownloadJson(string downloadURL)

{

using (WebClient client = new WebClient())

{

return client.DownloadString(downloadURL);

}

}

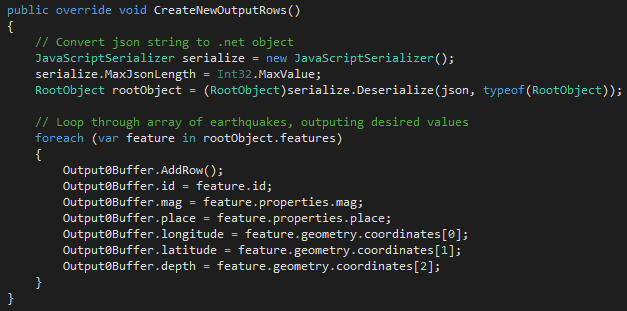

Deserialize the JSON string and output the desired data to the SSIS buffer. Some developers may scoff when they see the old school JavaScriptSerializer class, but using this class avoids having to load a 3rd party library like Json.NET into the Global Assembly Cache (GAC) on the server where SSIS runs. If you need to use Json.NET, you’ll likely have to jump through hoops with security and or server administrators to get it installed.

public override void CreateNewOutputRows()

{

// Convert json string to .net object using the old school JavaScriptSerializer class

JavaScriptSerializer serialize = new JavaScriptSerializer();

Earthquakes earthquakes = (Earthquakes)serialize.Deserialize(json, typeof(Earthquakes));

// Loop through array of earthquakes, outputing desired values to SSIS buffer

foreach (var feature in earthquakes.features)

{

Output0Buffer.AddRow();

Output0Buffer.FeatureID = feature.id;

Output0Buffer.DateTimeEpoch = feature.properties.time;

Output0Buffer.DateTimeLocal = epoch.AddMilliseconds(feature.properties.time).ToLocalTime();

Output0Buffer.Magnitude = feature.properties.mag;

Output0Buffer.Place = feature.properties.place;

Output0Buffer.Longitude = feature.geometry.coordinates[0];

Output0Buffer.Latitude = feature.geometry.coordinates[1];

Output0Buffer.Depth = feature.geometry.coordinates[2];

}

}

To test the script, add a Union All transformation with a data viewer on the path.

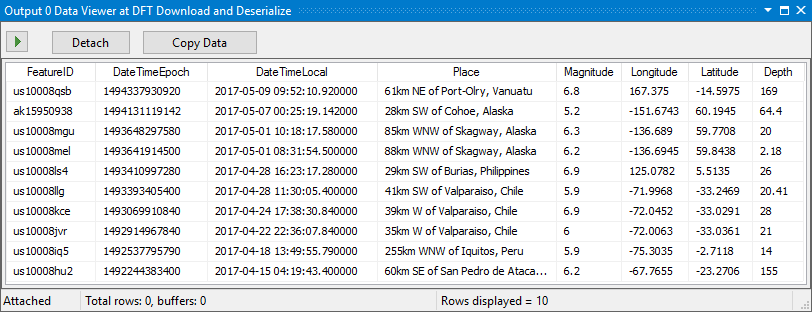

When executing the package, the results displayed in the data viewer should look something like this:

From here, you can use SSIS transformations to manipulate the data or it can be loaded directly into a destination.

Other Thoughts

With new projects using SQL Server 2016 and later, I default to using the SQL Server JSON functions for ingesting JSON. See my post Load JSON into SQL Server Using a Stored Procedure and C# for details.